Authors:

(1) Xian Liu, Snap Inc., CUHK (work done during an internship at Snap Inc.);

(2) Jian Ren, Snap Inc. (corresponding author: [email protected]);

(3) Aliaksandr Siarohin, Snap Inc.;

(4) Ivan Skorokhodov, Snap Inc.;

(5) Yanyu Li, Snap Inc.;

(6) Dahua Lin, CUHK;

(7) Xihui Liu, HKU;

(8) Ziwei Liu, NTU;

(9) Sergey Tulyakov, Snap Inc.

Table of Links

3 Our Approach and 3.1 Preliminaries and Problem Setting

3.2 Latent Structural Diffusion Model

A Appendix and A.1 Additional Quantitative Results

A.2 More Implementation Details and A.3 More Ablation Study Results

A.5 Impact of Random Seed and Model Robustness and A.6 Broader Impact and Ethical Consideration

A.7 More Comparison Results and A.8 Additional Qualitative Results

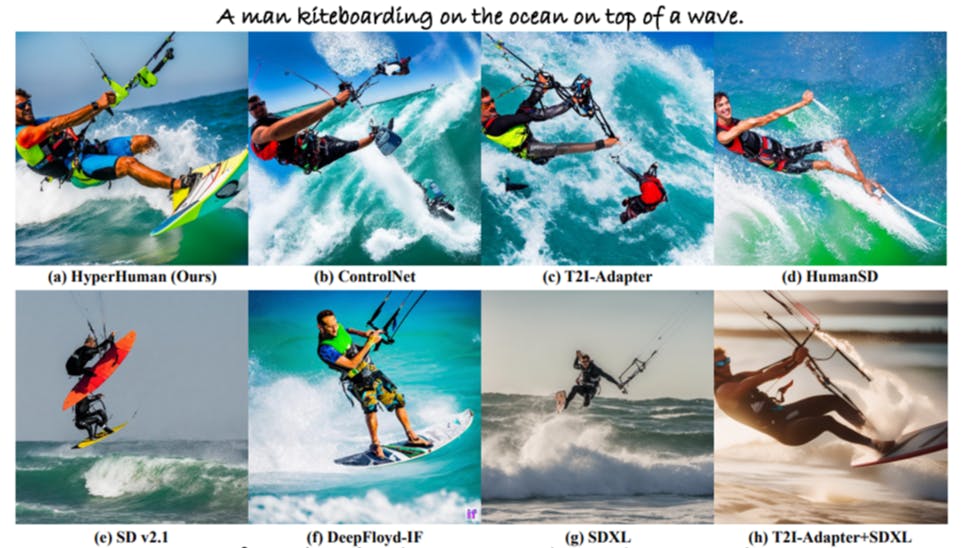

3 OUR APPROACH

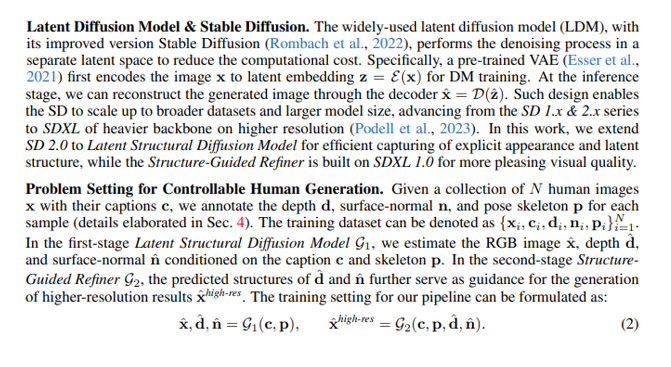

We present HyperHuman, which generates in-the-wild human images with high realism and diverse layouts. The overall framework is illustrated in Fig. 2. To keep the content self-contained and the narration clear, we first introduce some prerequisites of diffusion models and the problem setting in Sec. 3.1. Then, we present the Latent Structural Diffusion Model, which simultaneously denoises the depth and surface-normal along with the RGB image. The explicit appearance and latent structure are thus jointly learned in a unified model (Sec. 3.2). Finally, we elaborate on the Structure-Guided Refiner, which composes the predicted conditions for detailed, higher-resolution generation, in Sec. 3.3.

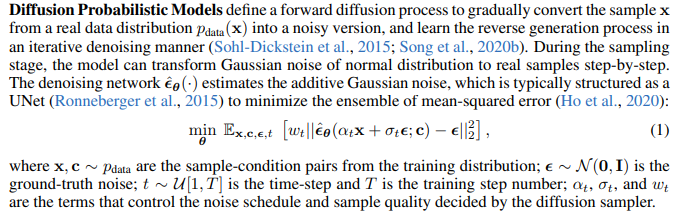

3.1 PRELIMINARIES AND PROBLEM SETTING

During inference, only the text prompt and body skeleton are needed to synthesize a well-aligned RGB image, depth, and surface-normal. Note that users are free to supply their own depth and surface-normal conditions to G2 in place of the predicted ones where applicable, which enables more flexible and controllable generation.
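The inference flow described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the names `StructuralOutputs`, `stage_one`, `stage_two`, and `generate` are hypothetical, strings stand in for images and maps, and whether G2 also receives the skeleton is assumed here rather than stated in this section.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StructuralOutputs:
    """Hypothetical container for the first-stage predictions."""
    rgb: str     # stand-in for the synthesized RGB image
    depth: str   # stand-in for the predicted depth map
    normal: str  # stand-in for the predicted surface-normal map


def stage_one(prompt: str, skeleton: str) -> StructuralOutputs:
    """Placeholder for the first stage, which jointly predicts RGB, depth,
    and surface-normal from the text prompt and body skeleton."""
    tag = f"{prompt}|{skeleton}"
    return StructuralOutputs(
        rgb=f"rgb({tag})",
        depth=f"depth({tag})",
        normal=f"normal({tag})",
    )


def stage_two(prompt: str, skeleton: str, depth: str, normal: str) -> str:
    """Placeholder for the refiner G2, which composes the conditions.
    Passing the skeleton to G2 is an assumption of this sketch."""
    return f"refined({prompt}; {skeleton}; {depth}; {normal})"


def generate(
    prompt: str,
    skeleton: str,
    user_depth: Optional[str] = None,
    user_normal: Optional[str] = None,
) -> str:
    """Run both stages, letting the user override depth and/or normal."""
    predicted = stage_one(prompt, skeleton)
    # Use user-provided conditions when given, otherwise the predictions.
    depth = user_depth if user_depth is not None else predicted.depth
    normal = user_normal if user_normal is not None else predicted.normal
    return stage_two(prompt, skeleton, depth, normal)
```

By default, `generate("a person", "pose")` needs only the prompt and skeleton. Passing `user_depth="my_depth"` replaces the predicted depth while the predicted surface-normal is still used, mirroring the optional substitution of conditions to G2.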

This paper is available on arXiv under the CC BY 4.0 DEED license.