Authors:

(1) Xian Liu, Snap Inc. and CUHK (work done during an internship at Snap Inc.);

(2) Jian Ren, Snap Inc. (corresponding author: [email protected]);

(3) Aliaksandr Siarohin, Snap Inc.;

(4) Ivan Skorokhodov, Snap Inc.;

(5) Yanyu Li, Snap Inc.;

(6) Dahua Lin, CUHK;

(7) Xihui Liu, HKU;

(8) Ziwei Liu, NTU;

(9) Sergey Tulyakov, Snap Inc.

Table of Links

3 Our Approach and 3.1 Preliminaries and Problem Setting

3.2 Latent Structural Diffusion Model

A Appendix and A.1 Additional Quantitative Results

A.2 More Implementation Details and A.3 More Ablation Study Results

A.5 Impact of Random Seed and Model Robustness and A.6 Broader Impact and Ethical Consideration

A.7 More Comparison Results and A.8 Additional Qualitative Results

3.2 LATENT STRUCTURAL DIFFUSION MODEL

To incorporate body skeletons for pose control, the simplest approaches are feature residuals (Mou et al., 2023) or input concatenation (Ju et al., 2023b). However, three problems remain: 1) Sparse keypoints depict only the coarse human structure, while the fine-grained geometry and the foreground-background relationship are ignored. Moreover, naive DM training is supervised only by RGB signals and thus fails to capture the inherent structural information. 2) The RGB image and the structure representations are spatially aligned but differ substantially in latent space, so modeling them jointly remains challenging. 3) In contrast to colorful RGB images, structure maps are mostly monotonous, with similar values in local regions, which makes them hard for DMs to learn (Lin et al., 2023).

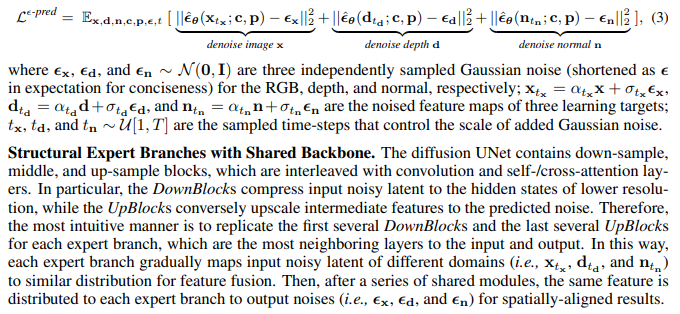

Unified Model for Simultaneous Denoising. Our solution to the first problem is to denoise the depth and surface normal simultaneously with the synthesized RGB image. We choose them as additional learning targets for two reasons: 1) Depth and normal can be easily annotated for large-scale datasets, and are also used in recent controllable T2I generation (Zhang & Agrawala, 2023). 2) As two commonly used forms of structural guidance, they supply complementary spatial-relationship and geometry information; depth (Deng et al., 2022), normal (Wang et al., 2022), or both (Yu et al., 2022b) have proven beneficial in recent 3D studies. A naive method is to train three separate networks to denoise the RGB, depth, and normal individually, but the spatial alignment between them is then hard to preserve. Therefore, we propose to capture the joint distribution in a unified model by simultaneous denoising, which can be trained with the simplified objective (Ho et al., 2020):
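The objective itself does not appear in the text at this point. As a hedged sketch only, assuming the standard noise-prediction loss of Ho et al. (2020) is summed over the three targets, it could take the form below. All symbols are assumptions of this sketch rather than the authors' notation: x, d, and n denote the RGB, depth, and normal targets; x_t, d_t, and n_t are their noised versions at timestep t; c is the conditioning input; ε_x, ε_d, and ε_n are independently sampled Gaussian noise; and each ε̂_θ term is the output of the corresponding branch of the unified network. Using a single shared timestep t for all three targets is also an assumption.

```latex
\[
\mathcal{L}_{\text{simple}} =
\mathbb{E}_{x, d, n, c, t, \epsilon_x, \epsilon_d, \epsilon_n}
\Big[
  \big\| \epsilon_x - \hat{\epsilon}_\theta^{\,x}(x_t, d_t, n_t; c, t) \big\|_2^2
+ \big\| \epsilon_d - \hat{\epsilon}_\theta^{\,d}(x_t, d_t, n_t; c, t) \big\|_2^2
+ \big\| \epsilon_n - \hat{\epsilon}_\theta^{\,n}(x_t, d_t, n_t; c, t) \big\|_2^2
\Big]
\]
```

Because all three terms are predicted by one network from the same noised inputs, the gradients of each term flow through the shared parameters, which is what ties the three outputs to a joint distribution instead of three independent ones.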

Furthermore, we find that the number of shared modules trades off spatial alignment against distribution learning. On the one hand, more shared layers make the final output features more similar, so that the paired texture and structure correspond to the same image. On the other hand, the RGB, depth, and normal can be treated as different views of the same image, so predicting them from the same feature essentially resembles an image-to-image translation task. Empirically, we find that the optimal design is to replicate the conv_in, first DownBlock, last UpBlock, and conv_out for each expert branch, with each branch’s skip-connections maintained separately (as depicted in Fig. 2). This yields both spatial alignment and joint capture of image texture and structure. Note that this design is not limited to three targets; it generalizes to an arbitrary number of paired distributions by simply adding more branches, with little computational overhead.
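The replication scheme described above can be illustrated with a minimal Python sketch that only records which module instances each branch passes through. It is not the authors' implementation: the module list `UNET_MODULES` (four down blocks, one mid block, four up blocks) and the helper names `build_layout` and `count_instances` are hypothetical, and the sketch deliberately says nothing about how branch features are merged inside the shared backbone.

```python
# Hypothetical module order of a Stable-Diffusion-style UNet. Only conv_in,
# DownBlock, UpBlock, and conv_out are named in the text; the block counts
# and the "mid" block are assumptions made for illustration.
UNET_MODULES = [
    "conv_in", "down_0", "down_1", "down_2", "down_3",
    "mid",
    "up_0", "up_1", "up_2", "up_3", "conv_out",
]

# Modules replicated per expert branch, following the text: conv_in, the
# first DownBlock, the last UpBlock, and conv_out.
REPLICATED = {"conv_in", "down_0", "up_3", "conv_out"}


def build_layout(branches):
    """Map each branch name to the module instances it passes through."""
    layout = {}
    for branch in branches:
        path = []
        for name in UNET_MODULES:
            if name in REPLICATED:
                path.append(f"{name}[{branch}]")  # branch-specific copy
            else:
                path.append(f"{name}[shared]")    # shared backbone module
        layout[branch] = path
    return layout


def count_instances(layout):
    """Count distinct module instances across all branches."""
    return len({module for path in layout.values() for module in path})


layout = build_layout(["rgb", "depth", "normal"])
total_instances = count_instances(layout)
```

Under these assumed block counts, three branches need 19 module instances (7 shared plus 4 copies for each of 3 branches), compared with 33 for three fully separate networks. Adding a fourth branch adds only 4 more instances, which is one way to read the claim that extra targets come with little overhead.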

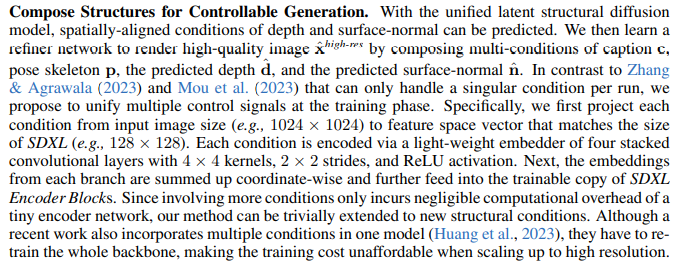

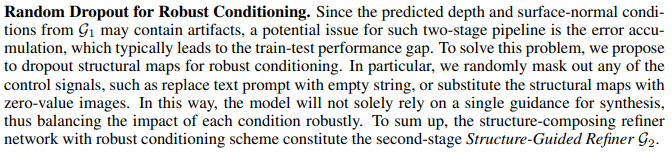

3.3 STRUCTURE-GUIDED REFINER

This paper is available on arXiv under the CC BY 4.0 DEED license.